Smart Instruction-Following LLMs

Oct 8, 2025

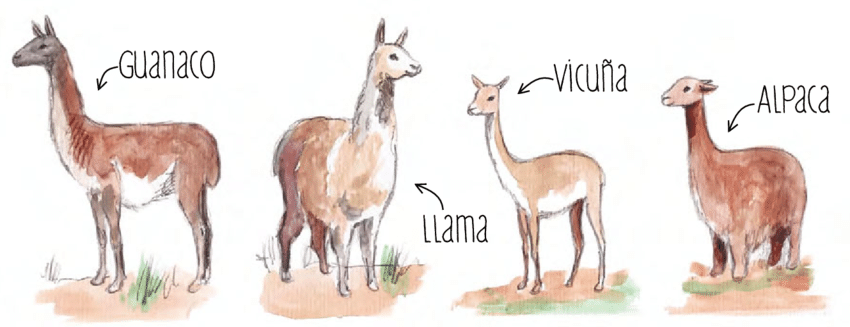


Researchers from Stanford University introduced Alpaca 7B, a language model fine-tuned from Meta's LLaMA 7B model, in March 2023. Alpaca is designed to follow instructions and was trained on 52,000 instruction-following demonstrations. It behaves similarly to OpenAI's text-davinci-003 model while being much smaller, easy to reproduce, and cheap: the team reported a total cost of under $600.

Overview

Instruction-following models such as text-davinci-003 have become increasingly powerful, and many people now use them regularly, including for work. These models still have serious shortcomings: they can generate false information, perpetuate social biases, and produce toxic language. Addressing these problems requires the academic community to study instruction-following models, yet academic researchers have had little access to capable models of this kind, because the strongest ones, such as text-davinci-003, are closed-source.

Training Process

Training Alpaca involved two main challenges: obtaining a strong pretrained language model and obtaining high-quality instruction-following data. The first was solved by Meta's LLaMA models, specifically LLaMA 7B. For the second, the researchers built on the self-instruct method: starting from the 175 human-written instruction-output pairs in the self-instruct seed set, they prompted OpenAI's text-davinci-003 to generate more instructions and outputs in the same style. This process yielded 52,000 unique instructions with their corresponding outputs for less than $500 in OpenAI API costs.
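Self-instruct grows its instruction pool iteratively, and one step keeps the data diverse: a newly generated instruction is kept only if it is not too similar to any instruction already in the pool. The Self-Instruct paper measures similarity with ROUGE-L and uses a 0.7 cutoff. The Python sketch below implements that filter from scratch under simplifying assumptions (lowercase word tokens, punctuation dropped, no stemming); the helper names `tokenize`, `lcs_length`, `rouge_l_f1`, and `filter_new_instructions` are hypothetical, and this is not the Alpaca team's code, which also involves prompting text-davinci-003 and further filtering not shown here.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; punctuation is dropped (a simplifying assumption)."""
    return re.findall(r"\w+", text.lower())


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence of two token lists (row-by-row dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0] * (len(b) + 1)
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1: harmonic mean of LCS-based precision and recall."""
    c, r = tokenize(candidate), tokenize(reference)
    lcs = lcs_length(c, r)
    if lcs == 0:  # also covers empty inputs, so no division by zero below
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def filter_new_instructions(pool: List[str], candidates: List[str], threshold: float = 0.7) -> List[str]:
    """Keep a candidate only if its ROUGE-L F1 against every instruction kept so far is below threshold."""
    kept = list(pool)
    for cand in candidates:
        if all(rouge_l_f1(cand, existing) < threshold for existing in kept):
            kept.append(cand)
    return kept


if __name__ == "__main__":
    seed = ["Give three tips for staying healthy."]  # illustrative seed, not the real 175-task seed set
    generated = [
        "Give three tips for staying healthy and fit.",  # near-duplicate of the seed
        "Translate this sentence into French.",         # novel instruction
    ]
    for instruction in filter_new_instructions(seed, generated):
        print(instruction)
```

Run as a script, this prints the seed instruction and the French-translation instruction. The near-duplicate is dropped because its ROUGE-L F1 against the seed is 6/7 ≈ 0.86, above the 0.7 cutoff. Because each candidate is compared against everything kept so far, duplicates within a single generated batch are filtered too.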

Performance Evaluation

Alpaca has undergone a preliminary evaluation. The evaluators, primarily the five student authors, ran a blind pairwise comparison of Alpaca 7B and text-davinci-003 on a diverse set of user-oriented instructions from the self-instruct evaluation set. The two models performed very similarly: Alpaca won 90 comparisons and text-davinci-003 won 89. Interactive testing has also revealed both Alpaca's capabilities and its limitations.
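A blind pairwise comparison reduces to counting which model the evaluators preferred. The Alpaca announcement reported 90 wins for Alpaca versus 89 for text-davinci-003. The Python sketch below turns such counts into a win rate and adds an exact two-sided sign test, which is our own addition and not part of the Alpaca evaluation, to show how little a 90-to-89 split says about either model being better. The function names are hypothetical, and ties, if any, are assumed to be dropped before counting.

```python
from math import comb


def win_rate(wins_a: int, wins_b: int) -> float:
    """Fraction of decided (non-tie) comparisons won by model A."""
    total = wins_a + wins_b
    if total == 0:
        raise ValueError("no decided comparisons")
    return wins_a / total


def sign_test_p_value(wins_a: int, wins_b: int) -> float:
    """Exact two-sided sign test against the null hypothesis that both models win with probability 0.5."""
    n = wins_a + wins_b
    k = max(wins_a, wins_b)
    # Probability that the leading model wins at least k of n comparisons under the null.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    alpaca_wins, davinci_wins = 90, 89  # counts reported in the Alpaca announcement
    print(f"Alpaca win rate: {win_rate(alpaca_wins, davinci_wins):.3f}")
    print(f"sign-test p-value: {sign_test_p_value(alpaca_wins, davinci_wins):.2f}")
```

For 90 versus 89 this prints a win rate of 0.503 and a p-value of 1.00: the split is exactly what two equally strong models would be expected to produce, which matches the announcement's reading of the result as "very similar performance."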

Opportunities for Improvement

Like other language models, Alpaca exhibits hallucination (generating incorrect information), toxicity, and stereotypes. For instance, Alpaca wrongly states that the capital of Tanzania is Dar es Salaam, the country's largest city, when the capital is Dodoma. Because Alpaca's outputs are often well written, it can also be used to produce convincing misinformation. The researchers acknowledge these limitations and encourage users to flag new kinds of failures in the web demo so the model can be improved.

Future Directions

The researchers have released several assets related to Alpaca: an interactive demo, the training data, the data generation process, and the training code. They also planned to release the model weights. They emphasize that Alpaca is intended for academic research only and must not be used for commercial purposes. Open work remains on evaluating Alpaca more thoroughly, improving its safety, and better understanding its capabilities and limitations.

Other Open-Source LLMs

Vicuna-13B is another open-source language model that gained attention in the AI community. It is a collaboration among researchers from UC Berkeley, CMU, Stanford, and UC San Diego, was fine-tuned from LLaMA on about 70,000 user-shared ChatGPT conversations collected from ShareGPT, and cost roughly $300 to train.

Comparing Vicuna with Alpaca:

- Both models are open-source, giving the public access to their code and weights.

- Vicuna is trained on user-shared conversations, while Alpaca is trained on self-instruct data generated with OpenAI's text-davinci-003 API.

- Vicuna had fewer GitHub stars than Alpaca at the time of writing, suggesting that Alpaca was the more popular project.

- On one GPT-4-judged writing prompt, a request to compose a blog post, Vicuna-13B scored a perfect 10/10 while Alpaca scored 7/10 because it did not actually write the requested post.

- Vicuna may be vulnerable to training data contamination, while Alpaca exhibits issues like hallucination, toxicity, and stereotypes.
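The GPT-4 scores above come from using a strong model as a judge: it reads a question and two answers and scores each from 1 to 10. As we understand the Vicuna evaluation, the judge prompt asked GPT-4 to put the two scores alone on the first line of its review; the Python sketch below assumes that format and parses it. The function names, the `Scores` alias, and the sample reviews are hypothetical illustrations, not the Vicuna team's code or real GPT-4 output. Scoring each pair twice with the answers in swapped order, then averaging, is a common way to reduce a judge's position bias, and the second helper shows that step.

```python
import re
from typing import Tuple

Scores = Tuple[float, float]


def parse_judge_scores(review: str) -> Scores:
    """Read the two 1-10 scores the judge is assumed to write alone on the first line of its review."""
    lines = review.strip().splitlines()
    if not lines:
        raise ValueError("empty review")
    numbers = re.findall(r"\d+(?:\.\d+)?", lines[0])
    if len(numbers) != 2:
        raise ValueError(f"expected two scores on the first line, got {lines[0]!r}")
    first, second = float(numbers[0]), float(numbers[1])
    if not (1 <= first <= 10 and 1 <= second <= 10):
        raise ValueError("scores must lie between 1 and 10")
    return first, second


def average_over_orders(scores_ab: Scores, scores_ba: Scores) -> Scores:
    """Combine judgments of one pair shown as (A, B) and then as (B, A) into one score per model."""
    score_a = (scores_ab[0] + scores_ba[1]) / 2
    score_b = (scores_ab[1] + scores_ba[0]) / 2
    return score_a, score_b


if __name__ == "__main__":
    # Hypothetical reviews; everything after the first line is free-form explanation.
    review_ab = "7 10\nAssistant 2 wrote the full post that was requested."
    review_ba = "9 8\nAssistant 1 wrote the full post that was requested."
    a, b = average_over_orders(parse_judge_scores(review_ab), parse_judge_scores(review_ba))
    print(f"model A: {a}, model B: {b}")
```

With these made-up reviews, model A receives 7 and 8 across the two orderings and model B receives 10 and 9, so the script prints averages of 7.5 and 9.5. Rejecting reviews whose first line does not hold exactly two in-range scores keeps malformed judge output from silently skewing the totals.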

In conclusion, the choice between Vicuna and Alpaca depends on project requirements. Vicuna benefits from training on real user-shared conversations and from its GPT-4-based assessment, while Alpaca's distinguishing feature is its self-instruct data generated with the text-davinci-003 API. Both models are open-source, which adds value despite their restrictions on commercial use.