Meditron: a Responsible Medical GenAI Breakthrough

Oct 8, 2025

Meditron, arguably the world’s best open-source large language model (LLM) for the medical field, was released last week by researchers at EPFL in Switzerland. It is built on Meta’s Llama 2 and is available in two model sizes, 7B and 70B parameters, mirroring Llama 2 sizes. The model was trained on just 46.7B tokens (compared with 300B for the GPT-3 family), including medical literature and abstracts from PubMed as well as internationally recognized medical guidelines.

The model outperformed the best public baseline by 6 percentage points (an absolute gain). Compared with closed-source models, it outperformed GPT-3.5 and Med-PaLM while trailing GPT-4 and Med-PaLM 2 by less than 10%. Four primary benchmarks were used for evaluation: MedQA (drawn from the US Medical Licensing Examination), MedMCQA (a multi-subject, multiple-choice dataset), PubMedQA (Q&A over PubMed abstracts) and MMLU-Medical (derived from the widely used Massive Multitask Language Understanding dataset).

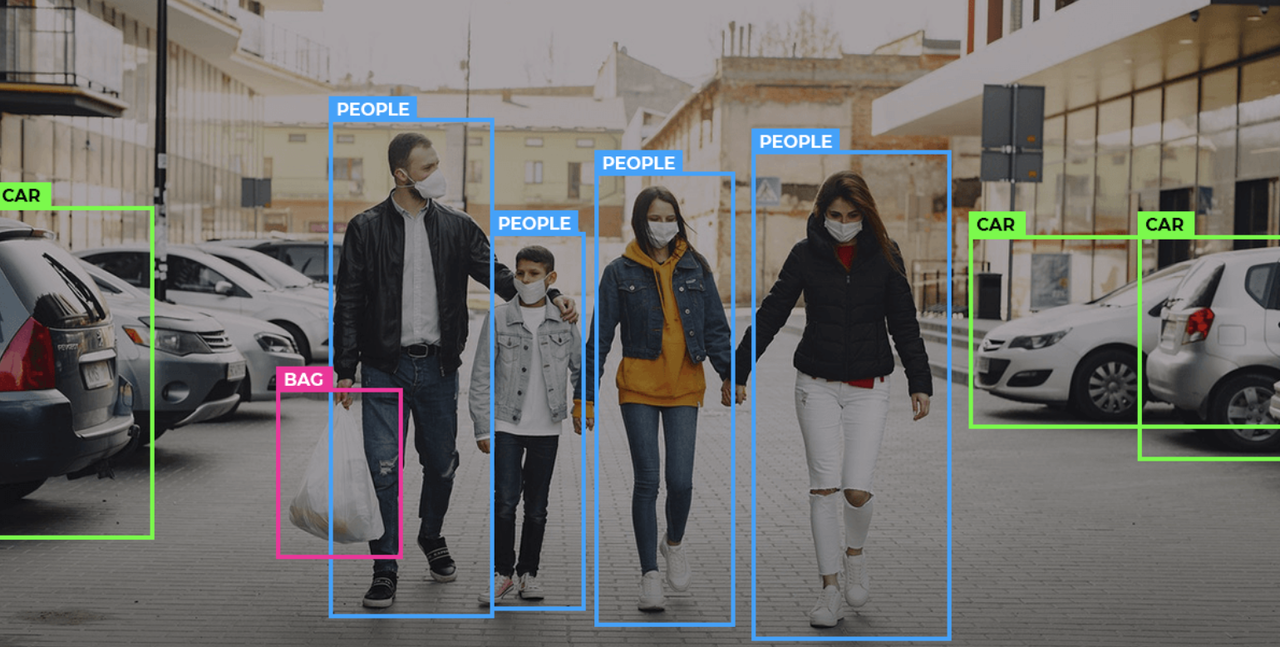
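To make the "absolute gain" wording concrete, here is a minimal sketch of how such a figure could be computed, assuming the gain is the difference in unweighted average accuracy across the four benchmarks. The function names and all scores below are hypothetical placeholders chosen for easy arithmetic; they are not Meditron's reported results.

```python
from statistics import mean

# The four benchmarks named in the article.
BENCHMARKS = ("MedQA", "MedMCQA", "PubMedQA", "MMLU-Medical")


def average_accuracy(scores: dict[str, float]) -> float:
    """Unweighted mean accuracy (in percent) over the four benchmarks."""
    return mean(scores[name] for name in BENCHMARKS)


def absolute_gain(model: dict[str, float], baseline: dict[str, float]) -> float:
    """Difference in average accuracy, in percentage points."""
    return average_accuracy(model) - average_accuracy(baseline)


def relative_gain(model: dict[str, float], baseline: dict[str, float]) -> float:
    """Improvement expressed as a percentage of the baseline's average accuracy."""
    base = average_accuracy(baseline)
    return 100.0 * (average_accuracy(model) - base) / base


# Hypothetical placeholder scores (NOT real benchmark results).
hypothetical_baseline = {
    "MedQA": 50.0, "MedMCQA": 50.0, "PubMedQA": 70.0, "MMLU-Medical": 70.0,
}
hypothetical_model = {
    "MedQA": 56.0, "MedMCQA": 56.0, "PubMedQA": 76.0, "MMLU-Medical": 76.0,
}

if __name__ == "__main__":
    print(f"absolute gain: {absolute_gain(hypothetical_model, hypothetical_baseline):.1f} points")
    print(f"relative gain: {relative_gain(hypothetical_model, hypothetical_baseline):.1f}%")
```

With these placeholder numbers the averages are 60 and 66, so the absolute gain is 6 percentage points while the relative gain is 10%. The two figures are easy to confuse, which is why the distinction matters when reading benchmark claims.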

Meditron was trained on transparent sources of high-quality evidence, such as the International Committee of the Red Cross clinical practice guidelines. This is intended to help the model deliver information safely and in a manner that is sensitive to human needs. Because it is open-source, other researchers can stress-test the model further and make it more robust over time, something that would not be possible with a closed-source model.

All kudos to Llama 2: this new era of open-source LLM advancements in specific domains is set to pick up pace and deliver technological "wows" like never before!